#DALMOOC’s week 1 competency 1.2 gave me an excuse to explore some definitions.

As a scientist, I found the insistence on using the term “analytics” as opposed to “analysis” intriguing… The trusty Wikipedia explained that analytics is “the discovery and communication of meaningful patterns in data” and has its roots in business. It is something wider (but not necessarily deeper) than data analysis/statistics as I am used to. Much of it is focused on visualisation to support decision-making based on large and dynamic datasets – I imagine producing visually appealing and convincing PowerPoint slides for your executive meeting would be one potential application…

I was glad to discover that there are some voices out there which share my concern over the seductive powers of attractive and simple images (and metrics) – here is a discussion of LA validity on the EU-funded LACE project website; and who has not heard about the issues with Purdue’s Course Signals retention predictions? Yet makers of tools such as Tableau (used in week 2 of this course) emphasise how little expertise one needs to use them to look at the data via the “visual windows”… The old statistical adage still holds – “garbage in – garbage out” (even though some evangelists might claim that in the era of big data statistics itself might be dead;). That’s enough of the precautionary rant…;)

I liked McNeill and co.’s choice of Cooper’s definition of analytics in their 2014 learning analytics paper (much of it based on CETIS LA review series):

Analytics is the process of developing actionable insights through problem definition and the application of statistical models and analysis against existing and/or simulated future data (my emphasis)

It includes the crucial step in looking at any data in applied contexts – simply asking yourself what you want to find out and change as a result of looking at it (the “problem definition”). As for the “actionable insights” – rather offensive management speak to my ears – doing something about what you find nonetheless seems a rather essential step in closing any learning loop.

The current official definition of Learning Analytics came out of an open online course in Learning and Knowledge Analytics 2011 and was presented at the 1st LAK conference (Clow, 2013):

“LA is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimising learning and the environments in which it occurs.”

This is the definition used in the course – the definition we are encouraged to examine and redefine as this very freshly minted field is dynamically changing its shape.

Instantly I liked how the definition is open on two fronts (although that openness seems to be more in the realm of aspiration than IRL practice, which is not surprising given the definition’s origins):

1. It does not restrict data collection to the digital traces left by the student within Learning Management Systems/Virtual Learning Environments, so it remains open to data from entirely non-digital contexts. In reality, though, the field grew out of and, from what I can see, largely remains in the realm of big data generated by ‘clicks’ (whether it be VLEs or games or intelligent tutoring systems). The whole point really is that it relies on data collected effortlessly (or economically) – compared to traditional sources of educational data, such as exams. What really sent a chill down my spine is the idea fleetingly mentioned by George Siemens in his intro lecture for this week – extending the reach outside of the virtual spaces via wearable tech… So as I participate in the course I will be looking out for examples of marrying the data from different sources. I will also look out for dangers/side effects of focusing on what can be measured rather than what should be measured. I feel that the latter may be made worse by limiting the range of perspectives involved in the development and application of LA (to LA experts and institutional administrators). So keeping an eye out for collaborative work on defining metrics/useful data between LA and educational experts/practitioners, and maybe even LDs, is also on the list. (I found one neat example already via the LACE SoLAR flare UK meet, which nicely coincided with week 1 – Patricia Charlton speaks for 3 min about the mc2 project starting at 4.40 min. The project helps educators to articulate many implicit measures used to judge students’ mathematical creativity. Wow – on so many levels!)

2. It is open as to who is in control of data collection (or ownership) and use – the institution vs the educator and the learner. I was going to say something clever here as well but it’s been so long since I started this post, it’s all gone out of my head (or just moved on). I found another quote from McNeill and co. which is relevant here:

Different institutional stakeholders may have very different motivations for employing analytics and, in some instances, the needs of one group of stakeholders, e.g. individual learners, may be in conflict with the needs of other stakeholders, e.g. managers and administrators.

It sort of touches on what I said under point 1 – the need for collaboration within an institution when applying LA. But it also highlights the students as voices of importance in LA metric development and application. It is their data after all, so they should be able to see it (and not just give permission for others to use it), and they are supposed to learn how to self-regulate their learning and all… Will I be able to see many IRL examples of such tools made available to students and individual lecturers (beyond the simple warning systems for failing students such as Course Signals)? There was a glimmer of hope for this from a couple of LACE SoLAR flare presentations. Geoffrey Bonin talked about the Pericles project and how it is working to provide a recommendation system for open resources in students’ personal learning environments (at 1 min here). Or, rather more radically, Marcus Elliot (Uni of Lincoln) is working on a Weapons of Mass Education project to develop a student organiser app that goes beyond the institution, giving students access to the digested data. It also involves a research project on student perceptions of learning analytics and on what institutional and student-collected data they find most useful – data analytics with students, not for students (at 25 min here).

(I found Doug Clow’s analysis of LA in UK HE re: institutional politics and power play in learning very helpful here and it was such a pleasant surprise to hear him speak in person at the LACE SoLAR flare event!)

The team’s perspective on the LA definition was presented in the weekly hangout (not surprisingly, everybody had their own flavour to add) – apologies for any transcription/interpretation errors:

- Carolyn (the text miner of forums): Defined LA as different from other forms of Data Mining in that it focuses on the learning process and learners’ experiences. Highlighted the importance of correct representation of the data/type of data captured for the analysis to be meaningful in this context vs e.g. general social behaviours.

- Dragan (social interaction/learning explorer): LA is something that helps us understand and optimise learning and is an extension (or perhaps replacement) of the existing things that are done in education and research, e.g. end-of-semester questionnaires are no longer necessary as one can see it all ‘on the go’. Prediction of student success is one of the main focuses of LA but it is more subtle than that – aimed at personalising learning support for the success of each individual.

- Ryan (the hard-core data miner who came to the DM table waaay ahead of the first SoLAR meet in 2011, his seminal 2009 paper on EDM is here): LA is figuring out what we can learn about learners, learning and the settings they are learning in to try to make it better. LA goes beyond providing automated responses to students; it also focuses on including stakeholders (students, teachers and others) in the communication of the findings.

So – a lot of insistence on the focus on learners and learning… implying that there are in fact some other analytics foci in education. I just HAD TO have a little peek at the literature around the history of this field to better understand the context and hence the focus of LA itself (master of procrastination reporting for duty!).

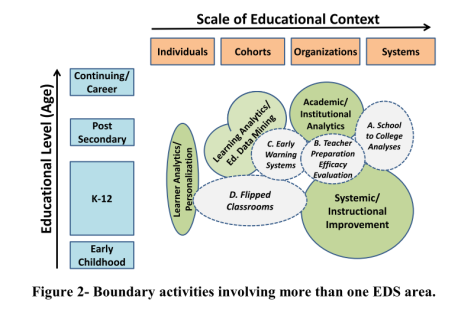

Since I have gone beyond the word count that any sensible co-learner may be expected to read, I will use a couple of images which do illustrate key points rather more concisely.

Long and Siemens’ Penetrating the fog – analytics in learning and education provides a useful differentiation between learning analytics and academic analytics, the latter being closer to business intelligence at the institutional level (this roughly maps onto the hierarchical definition of analytics in education by Buckingham Shum in his UNESCO paper – PDF):

I appreciate the importance of such “territorial” subject definitions, especially in such an emerging field, with the potential of being kidnapped by the educational economic efficiency agenda prevailing these days. However, having had experience of running courses within HE institutions, I feel that student experience and learning can be equally impacted by BOTH the institutional administration processes/policy decisions AND the quality of teaching, course design and content. So I believe that joined-up thinking across analytics “solutions” at all the scales should really be the holy grail here (however unrealistic;). After all, there is already much overlap in how the same data can be used at the different scales. For that reason I like the idea of a unified concept of Educational Data Sciences, with 4 subfields, as proposed by Piety, Hickey and Bishop in Educational data sciences – framing emergent practices for analytics of learning organisations and systems (PDF). With one proviso – it is heavily US-focused, esp. at >institution level. (NOTE that the authors consider Learning Analytics and Educational Data Mining to belong in a single bucket. My own grip on the distinction between the two is still quite shaky – perhaps a discussion for another post.)

I would not like to commit myself to a revised LA definition yet – I shall return to it at the end of the course (should I survive that long) to try to integrate all the tasty tidbits I collect on the way.

Hmm – what was the assignment for week 1 again? Ah – the LA software spreadsheet… oops, better get onto adding some bits to that:)

Headline image source: Flickr by Pat Dalton under CC license.